AI Agent Training: Complete Guide to Building & Training Custom Agents

Welcome to the future of AI, my friend. 🤖

Let's be real: tools like ChatGPT are amazing, but they're the starting pistol, not the finish line. When you're trying to nail a specific job, like B2B sales or automating a growth workflow, a generic AI just doesn't have the chops. You need a specialist—a custom-trained agent that doesn't just chat, but performs tasks, uses your tools, and thinks within your business logic.

The problem? The whole idea of AI agent training can feel like trying to drink from a firehose. Suddenly you're wrestling with data pipelines, model evaluations, infrastructure, and costs that can make your head spin. It's way too easy to get lost in the weeds before you've even built anything useful.

This guide is your roadmap. We're cutting through the noise to give you a production-minded, 10-step plan for training and deploying a custom agent that actually moves the needle.

Here's what you'll walk away with:

- How agents actually learn (and what "training" really means in 2026).

- The best frameworks and tools in the game right now.

- A step-by-step process with code snippets to get you building fast.

- Real-world best practices and pitfalls to sidestep.

- Case studies, including a deep dive into how sales agents like those used at gojiberry.ai are trained for growth.

The goal isn't just to build cool tech; it's to create a reliable, efficient teammate that accelerates your workflows. In a world of generic AI, a well-trained custom agent is your unfair advantage.

Ready to build an AI that can actually drive revenue? Let's get into it.

What Is AI Agent Training?

First, let's clear up a common misconception. When people hear "AI agent training," they often imagine building a new LLM from scratch. That's not what we're talking about.

Think of it more like onboarding a star employee. You aren't creating a human; you're teaching a capable individual your specific playbook, giving them the right tools, and showing them what success looks like. 🧑‍💻

For an AI agent, "training" is about shaping its behavior using several key levers:

- Instructions (Prompting): The agent's core "job description." A well-crafted prompt defines its role, rules, and goals.

- Knowledge (RAG): Giving the agent access to your private data (docs, wikis, CRM) so its answers are grounded in reality. This is done via Retrieval-Augmented Generation.

- Tools (Function Calling): Allowing the agent to interact with the outside world by calling APIs, browsing websites, or querying databases.

- Memory: Providing short-term context for a single task and long-term memory to recall past interactions.

- Feedback (Fine-Tuning & RLHF): Refining the agent's style, format, or decision-making process based on examples of "good" and "bad" outputs. This includes methods like Supervised Fine-Tuning (SFT) and Reinforcement Learning from Human Feedback (RLHF).

Why does this matter? Custom agents offer precision, reliability, and governance that generic models can't match. You control the data, the tools, and the rules of engagement.

The AI Agent Training Stack (Big Picture)

Before diving into the "how," it helps to see the big picture. Most articles jump straight to code, but understanding the architecture is a massive advantage. An AI agent isn't just a model; it's a system with several moving parts.

Here are the core components of a modern agentic stack:

- Base Model (LLM): The "brain" of the agent that handles reasoning and language generation (e.g., GPT-4, Llama 3).

- Tools: The agent's "hands." These are the APIs, functions, and web browsers it can use to take action.

- Memory: Its ability to recall information. This can be short-term (for the current conversation) or long-term (stored in a database).

- Knowledge: The external information store the agent can query, typically a RAG index built from your private documents.

- Policy: The set of instructions, rules, and constraints that govern the agent's behavior. This is often defined in a master prompt.

- Evaluations: The system for measuring the agent's performance, both before deployment (offline) and in production (online).

So, when we talk about AI agent training, we're really talking about optimizing how these components work together. The process is a continuous loop: the agent observes a task, thinks about a plan, acts using its tools, and learns from the evaluated outcome.
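
Here's a minimal sketch of that observe, think, act loop so you can see the moving parts in one place. Everything in it is an assumption for illustration: `stub_model` stands in for a real LLM call, `lookup_plan` is a made-up tool, and the "learn" part is reduced to keeping a trace you can evaluate later.

```python
from typing import Callable

# Hypothetical tool registry: the agent's "hands".
TOOLS: dict[str, Callable[[str], str]] = {
    "lookup_plan": lambda customer: {"acme": "enterprise"}.get(customer, "unknown"),
}


def stub_model(task: str, observations: list[str]) -> dict:
    """Stand-in for an LLM call: picks the next action from what it has seen so far."""
    if not observations:
        return {"action": "lookup_plan", "input": task}
    return {"action": "finish", "input": f"{task} is on the {observations[-1]} plan"}


def run_agent(task: str, max_steps: int = 5) -> tuple[str, list[dict]]:
    observations: list[str] = []
    trace: list[dict] = []  # kept so the outcome can be evaluated afterwards
    for _ in range(max_steps):
        decision = stub_model(task, observations)      # think
        trace.append(decision)
        if decision["action"] == "finish":
            return decision["input"], trace
        tool = TOOLS[decision["action"]]               # act
        observations.append(tool(decision["input"]))   # observe the result
    return "gave up: step budget exhausted", trace


answer, trace = run_agent("acme")
```

The `max_steps` budget is the design choice worth copying: it keeps a confused agent from looping (and spending) forever.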

AI Agent Training Frameworks

You don't have to build this entire stack from scratch. Several powerful frameworks exist to help you orchestrate these components. Choosing the right one depends on your goal. Are you building a quick prototype or a production-grade system?

LangChain

LangChain is one of the most widely used frameworks for building agentic workflows. It provides the glue to connect LLMs with tools, memory, and data sources, and it offers pre-built patterns for complex tasks like tool calling and RAG.

- Best for: Rapidly building and prototyping agent workflows that rely heavily on RAG and tool use.

- Example: A customer support agent that can query a knowledge base and create a support ticket via an API.

AutoGPT / Agentic Runtimes

Frameworks like AutoGPT are designed for more autonomous agents. They excel at task decomposition—breaking down a big, ambiguous goal ("research competitors") into a series of smaller, executable steps.

- Strengths: High degree of autonomy.

- Risks: Can be unreliable and lead to spiraling costs if not carefully monitored.

- Best for: Experimentation and automating internal operations where you can tolerate some variability.

Hugging Face Transformers

If you need deep control over the model itself, Hugging Face is your go-to. The Transformers library gives you direct access to thousands of open-weight models that you can fine-tune for your specific needs.

- Best for: Custom model training, full control over the stack, and working with open-weight models. You'd choose this path when API-based solutions aren't flexible or secure enough.

OpenAI Fine-Tuning

When your goal is to shape the behavior of a powerful base model rather than just give it new knowledge, OpenAI's fine-tuning API is a strong choice. It's excellent for ensuring outputs have a consistent style, format, or tone.

- Best for: Nailing brand voice, generating consistently formatted JSON, or teaching the model a specific conversational style. It's often the right move when prompting and RAG alone can't achieve the required consistency.

10 Steps to Train an AI Agent

Alright, let's get tactical. This is the 10-step, production-minded blueprint for taking an agent from idea to deployment. For each step, we'll cover the goal, practical actions, the output you should have, and common pitfalls to avoid. 🚀

Step 1: Define the Agent’s Job (Scope + Success Metrics)

- Goal: Create a crystal-clear "job description" for your agent. Vague goals lead to useless agents.

- Define the specific, narrow task (e.g., "draft a personalized outreach email based on a LinkedIn profile").

- Specify the exact inputs (e.g., URL to a profile) and required outputs (e.g., a 75-word email in JSON format).

- Set measurable KPIs. Examples include accuracy rate, task completion time, conversion rate, or cost per task.
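
One way to keep the job description honest is to write it down as data and check outputs against it. The sketch below does that for the outreach-email example; the field names (`subject`, `body`) and the KPI targets are illustrative assumptions, not recommended values.

```python
import json
from dataclasses import dataclass


@dataclass
class AgentJobSpec:
    task: str
    input_fields: tuple[str, ...]
    output_fields: tuple[str, ...]
    max_words: int
    kpis: dict[str, float]


# Hypothetical spec for the outreach-email example; the targets are placeholders.
SPEC = AgentJobSpec(
    task="Draft a personalized outreach email based on a LinkedIn profile",
    input_fields=("profile_url",),
    output_fields=("subject", "body"),
    max_words=75,
    kpis={"format_compliance": 0.98, "max_cost_per_task_usd": 0.05},
)


def meets_output_contract(raw_output: str, spec: AgentJobSpec) -> bool:
    """True if the output is a JSON object with exactly the expected fields and a short enough body."""
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError:
        return False
    if not isinstance(data, dict) or set(data) != set(spec.output_fields):
        return False
    return len(str(data["body"]).split()) <= spec.max_words
```

A check like `meets_output_contract` becomes the first test in your evaluation harness later on.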

Step 2: Choose Your “Training Approach” (Prompt vs RAG vs Fine-Tune)

- Goal: Pick the simplest technical path that reliably meets your job requirements. Don't over-engineer it.

- Need private or real-time knowledge? → Start with RAG.

- Need a highly consistent format, style, or voice that prompting can't nail? → Add Fine-Tuning.

- Need to execute tasks in other systems (APIs, databases)? → Use an Agent Framework with Tool Calling.

- Output Artifact: A documented decision on your approach and a set of baseline metrics you aim to beat.
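
The decision rules above are simple enough to write as a function, which is a handy way to document the choice in your repo. This is only a sketch of those three rules; the function and label names are made up for illustration.

```python
def choose_approach(
    needs_private_knowledge: bool,
    needs_strict_style: bool,
    needs_external_actions: bool,
) -> list[str]:
    """Return the simplest stack that covers the stated needs."""
    approach = ["prompting"]  # prompting is always the baseline
    if needs_private_knowledge:
        approach.append("rag")
    if needs_external_actions:
        approach.append("tool_calling")
    if needs_strict_style:
        approach.append("fine_tuning")  # added last: the most expensive lever
    return approach


plan = choose_approach(
    needs_private_knowledge=True,
    needs_strict_style=False,
    needs_external_actions=True,
)
```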

Step 3: Gather Training Data (And What Counts as “Data”)

- Goal: Collect high-quality examples of the job done right. This is the fuel for your agent.

- Source data from chat logs, support tickets, internal docs, call transcripts, or CRM records.

- Focus on quality over quantity. 200 "golden" examples are better than 20,000 messy ones.

- Define a clear labeling strategy (e.g., what constitutes a "successful" outreach email?).

- Anonymize all Personally Identifiable Information (PII). This is non-negotiable.

- Output Artifact: A curated and anonymized dataset.
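
To make the anonymization step concrete, here's a rough first pass using regular expressions. Treat it as a sketch under loud assumptions: these two patterns only catch simple email addresses and phone-like digit runs, they won't catch names or addresses, and a real pipeline would likely need a dedicated PII detection tool plus human spot checks.

```python
import re

# Simplified patterns (assumptions): plain emails and phone-like digit sequences only.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(text: str) -> str:
    """Replace matched emails and phone numbers with placeholder tokens."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


sample = "Reach Dana at dana@example.com or +1 (555) 010-2345."
redacted = redact_pii(sample)
```

Note that the name "Dana" survives this pass, which is exactly why regexes alone aren't enough.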

Step 4: Prepare & Structure the Data

- Goal: Get your raw data into a clean, machine-readable format for training and evaluation.

- Clean, deduplicate, and normalize the data.

- Split your data into train, validation, and test sets to prevent overfitting and evaluate performance honestly.

- Consider synthetic data generation carefully, and only for well-understood tasks where quality can be controlled.

- Output Artifact: A structured dataset (e.g., in JSONL or CSV format) with a clear schema.
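
Here's a small sketch of the dedupe, split, and export steps using only the standard library. It assumes each record is a dict with a `prompt` key (a schema chosen for illustration) and an 80/10/10 split, which is a common default rather than a rule.

```python
import json
import random


def dedupe(records: list[dict]) -> list[dict]:
    """Drop records whose prompt matches an earlier one after case/whitespace normalization."""
    seen, unique = set(), []
    for rec in records:
        key = " ".join(rec["prompt"].lower().split())
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique


def split_dataset(records: list[dict], train: float = 0.8,
                  validation: float = 0.1, seed: int = 42) -> dict[str, list[dict]]:
    """Shuffle with a fixed seed so the split is reproducible, then slice."""
    shuffled = records[:]
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train)
    n_val = int(len(shuffled) * validation)
    return {
        "train": shuffled[:n_train],
        "validation": shuffled[n_train:n_train + n_val],
        "test": shuffled[n_train + n_val:],  # never touch this during training
    }


def to_jsonl(records: list[dict]) -> str:
    """One JSON object per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)
```

Deduplicating before splitting matters: a near-duplicate that lands in both train and test quietly inflates your scores.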

Step 5: Pick a Base Model (Constraints + Tradeoffs)

- Goal: Select the right LLM "engine" for your agent based on your specific needs.

- Performance vs. Cost vs. Latency: Larger models are more capable but slower and more expensive.

- Open Weights vs. API: Self-hosting an open-weight model gives you control, while an API is easier to start with.

- Multi-modal Needs: Do you need to process images or audio in addition to text?

- Output Artifact: A chosen base model and a justification for the choice.
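
The cost side of that tradeoff is just arithmetic, so it's worth scripting. The sketch below compares candidates by cost per task under a latency cap. The model names, prices, and latencies are placeholder numbers invented for the example, not real vendor pricing, and it deliberately ignores quality, which you'd measure separately in evaluation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ModelOption:
    name: str
    usd_per_1m_input: float   # price per million input tokens (placeholder)
    usd_per_1m_output: float  # price per million output tokens (placeholder)
    p50_latency_s: float      # typical response time in seconds (placeholder)


def cost_per_task(m: ModelOption, input_tokens: int, output_tokens: int) -> float:
    total = input_tokens * m.usd_per_1m_input + output_tokens * m.usd_per_1m_output
    return total / 1_000_000


def cheapest_within_latency(options: list[ModelOption], input_tokens: int,
                            output_tokens: int, max_latency_s: float) -> Optional[ModelOption]:
    """Cheapest option that meets the latency cap, or None if nothing qualifies."""
    eligible = [m for m in options if m.p50_latency_s <= max_latency_s]
    if not eligible:
        return None
    return min(eligible, key=lambda m: cost_per_task(m, input_tokens, output_tokens))


CANDIDATES = [
    ModelOption("large-model", 10.0, 30.0, 4.0),
    ModelOption("small-model", 0.5, 1.5, 0.8),
]
choice = cheapest_within_latency(CANDIDATES, input_tokens=2000, output_tokens=200, max_latency_s=2.0)
```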

Step 6: Set Up the Training Environment

- Goal: Create a reproducible and version-controlled environment for your development work.

- Choose your platform: local machine for simple tasks, or a cloud service like Google Colab, AWS SageMaker, or Azure ML for serious projects.

- Set up a version control system (like Git) for your code, prompts, and configurations.

- Use a checklist to ensure your setup is reproducible.

- Output Artifact: A version-controlled project repository with environment setup scripts.

Step 7: Train the Agent (Two Tracks)

This is where you execute the approach you chose in Step 2.

Track A: Agent Behavior without Fine-Tuning

- Goal: Shape the agent's behavior using prompts, tools, and a knowledge base.

- Write a detailed master prompt that defines the agent's persona, rules, and workflow.

- Build your RAG index by loading your documents into a vector database.

- Define clear schemas for any tools the agent will use (e.g., OpenAPI spec for an API).

- Output Artifact: A master prompt file and a queryable RAG index.
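
To show how a master prompt and a retrieval index fit together, here's a toy version with no external dependencies. The big assumption: a bag-of-words cosine similarity stands in for real embeddings and a vector database, and the two documents and the prompt wording are invented for the example.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base; in practice this lives in a vector database.
DOCS = {
    "refunds": "Refunds are issued within 14 days of purchase for annual plans.",
    "sso": "Single sign-on is available on the enterprise plan only.",
}

MASTER_PROMPT = (
    "You are a support agent. Answer only from the context below. "
    "If the context does not contain the answer, say so and offer a human handoff.\n\n"
    "Context:\n{context}\n\nQuestion: {question}"
)


def _vector(text: str) -> Counter:
    """Word-count vector; a crude stand-in for an embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 1) -> list[str]:
    """Return the ids of the k documents most similar to the query."""
    q = _vector(query)
    ranked = sorted(DOCS, key=lambda doc_id: _cosine(q, _vector(DOCS[doc_id])), reverse=True)
    return ranked[:k]


def build_prompt(question: str) -> str:
    context = "\n".join(DOCS[doc_id] for doc_id in retrieve(question))
    return MASTER_PROMPT.format(context=context, question=question)


prompt = build_prompt("Is single sign-on available?")
```

The string returned by `build_prompt` is what you'd send to the model. Swapping in a real embedding model changes `_vector` and `_cosine`, not the overall shape.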

Track B: Fine-Tuning

- Goal: Refine the base model's behavior using your curated dataset.

- Format your dataset into the required structure (usually prompt/completion pairs in JSONL).

- Use the platform's SDK or CLI to launch the training job.

- Output Artifact: A new, fine-tuned model ID.
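
Formatting is where most fine-tuning jobs fail before they start, so here's a sketch of the conversion step only. It assumes your curated data is a list of `prompt`/`completion` dicts and converts each pair into a chat-style `messages` record, one JSON object per line, which is the layout OpenAI's chat fine-tuning expects. Other platforms may want a different schema, so check the docs for the one you use; launching the job itself is left to that platform's SDK or CLI.

```python
import json


def to_chat_example(prompt: str, completion: str, system: str) -> dict:
    """Wrap one prompt/completion pair as a chat-style training example."""
    return {
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": prompt},
            {"role": "assistant", "content": completion},
        ]
    }


def build_training_file(pairs: list[dict], system: str) -> str:
    """Return JSONL text, skipping pairs with an empty prompt or completion."""
    lines = []
    for pair in pairs:
        if not pair["prompt"].strip() or not pair["completion"].strip():
            continue  # empty examples teach the model nothing useful
        lines.append(json.dumps(to_chat_example(pair["prompt"], pair["completion"], system)))
    return "\n".join(lines)


pairs = [
    {"prompt": "Write a subject line for a demo invite.", "completion": "See it live in 15 minutes"},
    {"prompt": "Write a follow-up opener.", "completion": "   "},
]
jsonl_text = build_training_file(pairs, system="You write concise B2B outreach copy.")
```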

Step 8: Evaluate Performance (Offline + Online)

- Goal: Rigorously test your agent to see if it meets your quality bar before it interacts with real users.

- Offline Evaluation: Test the agent against your holdout test dataset. Measure KPIs like accuracy, format compliance, and factual grounding.

- Online Evaluation: Once it passes offline tests, A/B test the agent in a controlled, live environment. Capture user feedback and monitor for performance drift.

- Create an error taxonomy to classify failures (e.g., hallucination, tool misuse, refusal).

- Output Artifact: An evaluation script (harness) and a performance report.
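
An offline harness doesn't need to be fancy to be useful. The sketch below runs an agent over a test set and buckets every failure into a small error taxonomy. The categories, the `answer` field, and `fake_agent` are assumptions for illustration; a real taxonomy would add things like hallucination and tool misuse, which usually need grounding checks or human review to detect.

```python
import json
from collections import Counter
from typing import Callable


def classify(output: str, expected: dict) -> str:
    """Return 'pass' or an error category from a small, hypothetical taxonomy."""
    try:
        data = json.loads(output)
    except json.JSONDecodeError:
        return "format_error"
    if not isinstance(data, dict) or "answer" not in data:
        return "format_error"
    if data["answer"] is None:
        return "refusal"
    if data["answer"] != expected["answer"]:
        return "wrong_answer"
    return "pass"


def run_eval(agent: Callable[[str], str], test_set: list[dict]) -> dict:
    """Score the agent on every case and summarize accuracy plus error counts."""
    if not test_set:
        raise ValueError("test_set is empty")
    outcomes = Counter(classify(agent(case["input"]), case) for case in test_set)
    errors = {k: v for k, v in outcomes.items() if k != "pass"}
    return {"accuracy": outcomes["pass"] / len(test_set), "errors": errors}


def fake_agent(question: str) -> str:
    """Stand-in for the real agent so the harness can be exercised."""
    canned = {
        "2+2": '{"answer": 4}',
        "capital of France": '{"answer": "Paris"}',
        "3*3": '{"answer": 6}',
    }
    return canned.get(question, "I think so")


TEST_SET = [
    {"input": "2+2", "answer": 4},
    {"input": "capital of France", "answer": "Paris"},
    {"input": "3*3", "answer": 9},
    {"input": "unknown question", "answer": "n/a"},
]
report = run_eval(fake_agent, TEST_SET)
```

Run the same harness after every prompt, index, or model change and compare reports; that's your regression test.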

Step 9: Optimize & Iterate

- Goal: Improve the agent's performance based on your evaluation results.

- If fine-tuning, you might tune hyperparameters like the learning rate.

- More commonly, you'll iterate on your prompt, improve your RAG documents, or add better examples to your dataset.

- Optimize for cost by experimenting with smaller models, caching results, or implementing an agent router.

- Output Artifact: An updated agent with improved performance metrics.
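
Caching and routing are the two cheapest cost wins, and they combine nicely. In this sketch, `small_model` and `large_model` are stubs standing in for real model calls, and the routing heuristic (word count plus a "step by step" keyword) is a made-up placeholder. In practice you'd tune the router against your evaluation set.

```python
from functools import lru_cache

calls = {"small": 0, "large": 0}  # counts real model invocations, for illustration


def small_model(prompt: str) -> str:
    calls["small"] += 1
    return f"[small] {prompt}"


def large_model(prompt: str) -> str:
    calls["large"] += 1
    return f"[large] {prompt}"


def route(prompt: str) -> str:
    """Hypothetical heuristic: long or multi-step requests go to the larger model."""
    hard = len(prompt.split()) > 40 or "step by step" in prompt.lower()
    return "large" if hard else "small"


@lru_cache(maxsize=1024)
def answer(prompt: str) -> str:
    """Identical prompts are served from the cache instead of calling a model again."""
    model = large_model if route(prompt) == "large" else small_model
    return model(prompt)


answer("What is our refund window?")
answer("What is our refund window?")  # cache hit: no second model call
answer("Explain step by step how SSO login works")
```

Exact-match caching only helps with repeated prompts, and it will serve stale answers if your knowledge base changes, so clear it (`answer.cache_clear()`) when you update your RAG documents.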

Step 10: Deploy, Monitor, Retrain

- Goal: Ship your agent to production and ensure it remains reliable over time.

- Choose a deployment pattern (e.g., API endpoint, serverless function, container).

- Set up a monitoring dashboard to track latency, cost, and quality metrics in real-time.

- Define triggers for retraining (e.g., a drop in accuracy, a new type of user request) to kick off the improvement cycle again.

Once your agent is performing well in evaluation, the real work starts: shipping it into production without letting quality decay over time. The output you’re aiming for here is simple and concrete: a stable API endpoint that serves the agent, plus a monitoring dashboard that tells you—at a glance—whether it’s still behaving the way you expect.

A strong production setup starts with better evaluation data, not more data. Instead of collecting a massive noisy dataset, curate “gold datasets”: smaller sets of perfect, representative examples that define what “good” looks like. Keep strict separation between training and testing to avoid data leakage—your test set must be truly unseen during training. To keep labeling consistent (especially across multiple reviewers), write a clear labeling rubric that defines the standards and edge cases.

From there, you need an evaluation harness that runs regression tests automatically. Every prompt change, RAG update, tool change, or model swap should trigger the same suite of tests so you can detect quality regressions immediately. Reliability also means stress testing: actively try to break the agent with adversarial prompts and jailbreak attempts. If you’re using RAG, you should also verify grounding—answers must be supported by retrieved documents, and citations should be included when possible.

In production, feedback is your sensor. Even a simple thumbs up/down signal gives you a fast way to spot failure patterns. The best teams implement active learning: when the agent is uncertain or wrong, those cases get flagged for human review, corrected, and fed back into the next training cycle. Retraining shouldn’t be a panic response; schedule it regularly to prevent drift as your product, docs, and user behavior evolve.
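
Here's a small sketch of that feedback loop: a rolling window of thumbs up/down votes, a queue of downvoted interactions for human review, and a flag that fires when the positive rate dips. The class name, window size, and threshold are all illustrative assumptions; pick values that match your traffic.

```python
from collections import deque


class FeedbackMonitor:
    def __init__(self, window: int = 50, min_positive_rate: float = 0.8):
        self.votes: deque = deque(maxlen=window)  # only the most recent votes count
        self.min_positive_rate = min_positive_rate
        self.review_queue: list[str] = []         # interactions flagged for human review

    def record(self, interaction_id: str, thumbs_up: bool) -> None:
        self.votes.append(thumbs_up)
        if not thumbs_up:
            self.review_queue.append(interaction_id)

    @property
    def positive_rate(self) -> float:
        return sum(self.votes) / len(self.votes) if self.votes else 1.0

    def needs_attention(self) -> bool:
        """Only fire once the window is full, to avoid alarms on tiny samples."""
        window_full = len(self.votes) == self.votes.maxlen
        return window_full and self.positive_rate < self.min_positive_rate


monitor = FeedbackMonitor(window=5, min_positive_rate=0.8)
for interaction_id, vote in [("a", True), ("b", True), ("c", False), ("d", True), ("e", False)]:
    monitor.record(interaction_id, vote)
```

The corrected examples from `review_queue` are what feed your next training or prompt-iteration cycle.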

Governance matters too. Scan data and outputs for PII and bias, and keep audit logs of actions and decisions for debugging and accountability. Most importantly, lock down permissions: define exactly which tools and data the agent can access. Never give broad access “just in case.” In practice, scope control is one of the biggest predictors of whether an agent stays safe and cost-effective in the real world.
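
Scope control and audit logging can live in one small wrapper around every tool call. This is a sketch, not a security product: the role name, tool names, and in-memory `audit_log` are assumptions, and production systems would typically enforce permissions at the API or database layer as well.

```python
from typing import Any, Callable


class ToolPermissionError(Exception):
    """Raised when an agent tries to use a tool outside its allowlist."""


# Hypothetical allowlist: each agent role gets only the tools it needs.
ALLOWED_TOOLS = {"support_agent": {"search_kb", "create_ticket"}}

audit_log: list[dict] = []  # in production this would go to durable storage


def call_tool(agent_role: str, tool_name: str,
              registry: dict[str, Callable[..., Any]], **kwargs: Any) -> Any:
    """Check the allowlist, log the attempt either way, then run the tool."""
    allowed = tool_name in ALLOWED_TOOLS.get(agent_role, set())
    audit_log.append({"role": agent_role, "tool": tool_name, "allowed": allowed})
    if not allowed:
        raise ToolPermissionError(f"{agent_role} may not call {tool_name}")
    return registry[tool_name](**kwargs)


REGISTRY = {
    "search_kb": lambda query: f"results for {query}",
    "delete_account": lambda account_id: f"deleted {account_id}",
}

result = call_tool("support_agent", "search_kb", REGISTRY, query="refund policy")
try:
    call_tool("support_agent", "delete_account", REGISTRY, account_id="42")
except ToolPermissionError:
    blocked = True
```

Logging denied attempts is deliberate: a spike in blocked calls is often the first sign of a prompt-injection attempt or a confused agent.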

To sanity-check readiness, you want clear success metrics, anonymized training data, automated evaluations, explicit safety testing, a human escalation path, real-time monitoring for cost and latency, a retraining plan, strict access controls, and detailed logs. If any of those are missing, you’re not truly production-ready—yet.

Production Case Studies (What Works in the Real World)

One SaaS support team wanted to reduce ticket resolution time and improve CSAT by automating answers to common questions. They used a RAG + tool-calling approach: the agent was grounded in the internal knowledge base and could safely check subscription status or open a ticket when needed. Within three months they saw a 28% reduction in average resolution time and a 12-point lift in CSAT. The biggest lesson was that grounding almost eliminated hallucinations, but the real trust-builder was a reliable “human handoff” when the agent got stuck.

A marketing team needed high-volume, on-brand content without having editors review every draft. They chose fine-tuning and built a gold-standard dataset of 1,500 examples from their best-performing copy. After fine-tuning a mid-sized model, content velocity increased by 40% and editor-rated quality jumped from 7/10 with a generic model to 9/10. Their main takeaway was that fine-tuning beat prompting for brand voice, and a smaller specialized model was cheaper to run than a large general model.

A growth startup wanted to personalize outreach at scale and improve reply rates with high-intent prospects. They implemented a hybrid system inspired by platforms like gojiberry.ai: RAG pulled real-time buying signals and prospect context, while a fine-tuned model generated the outreach. Guardrails and an evaluation layer reviewed every message before sending. The result was a 75% lift in reply rate versus their previous template-based approach. The key driver was the quality of labeled training data (successful vs. unsuccessful messages), and the non-negotiable was a rigorous evaluation harness to protect brand reputation and prevent drift.

A BI team wanted non-technical stakeholders to query company data in plain English. They used advanced tool calling so the agent could translate questions into SQL, execute queries against a read-only database, and summarize results. This reduced ad-hoc data requests to the BI team by 60%. The biggest lesson was evaluation safety: every generated SQL query was tested in a sandbox first to prevent errors and ensure data security.

Tooling Stack (Short, Practical)

For orchestration, LangChain is a common choice for composing agentic workflows. For open-weight model training and control, Hugging Face Transformers is the standard option. If you prefer API-based power with fine-tuning, the OpenAI API is a strong default. For labeling and annotation, platforms like Labelbox and Scale AI offer end-to-end tooling, while Prodigy is popular for developer-driven workflows. For vision labeling specifically, Roboflow is a typical go-to.

On training infrastructure, Colab works well for early prototyping, while AWS SageMaker, Azure ML, and Paperspace are common when you move into serious jobs. For experiment tracking, Weights & Biases, MLflow, and Neptune are widely used. For evaluation, many teams still build custom harnesses, but dedicated LLM evaluation tooling is rapidly maturing.

How Trained Agents Drive Growth (Business Value)

For growth teams, trained agents are a force multiplier because they enable personalization at scale, automate repetitive top-of-funnel workflows like research and qualification, shorten iteration loops for testing new segments or offers, and extract predictive insights from interaction data (like who is most likely to convert or churn). Systems built for sales and growth—like the AI-driven workflows at gojiberry.ai—package these principles into production-ready pipelines that prioritize high-intent prospects and deliver more qualified pipeline faster.

Common Mistakes to Avoid

Most failures come from predictable traps: starting with bad data, overfitting to a single offline metric, using a weak evaluation setup without a true holdout test set, ignoring drift after launch, missing bias/PII risks, or shipping an “autonomous” agent with broad permissions and no guardrails. In production, these mistakes don’t just hurt quality—they create compliance, cost, and brand-risk issues fast.

FAQ

Let's tackle a few more common questions that come up when teams start their AI agent training journey.

How much data do I need?

It depends on your approach! For RAG, a few dozen high-quality documents can be enough to start. For fine-tuning, you'll typically want at least a few hundred "golden" examples, but quality always trumps quantity. Start small and iterate.

How long does it take?

A simple RAG agent can be prototyped in a day. A production-grade system with fine-tuning and rigorous evaluation can take several weeks or months. Data preparation is almost always the longest part of the process.

What does it cost?

Costs fall into three buckets: data preparation (can be high if you use labeling services), model training (GPU costs for fine-tuning), and inference (ongoing API calls or infrastructure costs). A simple API-based agent can be very cheap, while self-hosting a large model can be expensive.

Can I do it without coding?

While low-code and no-code platforms are emerging, building a truly custom, reliable agent still requires coding knowledge, particularly in Python. Frameworks like LangChain significantly reduce the amount of boilerplate code you need to write.

How do I evaluate reliability?

Through a combination of offline and online testing. You need an automated evaluation harness to check for accuracy and format compliance, and you need to monitor the agent's performance with real users to catch issues you didn't anticipate.

What’s next in AI agent training?

The field is moving toward more sophisticated multi-agent systems, where different specialized agents collaborate to solve complex problems. We're also seeing major advances in on-device agents and more robust evaluation frameworks.

You’ve just absorbed the entire playbook for building and training custom AI agents in 2026. You have the steps, the best practices, and the real-world examples. So, what now?

The key takeaway is this: start with the simplest approach that meets your reliability needs. Fight the urge to over-engineer. A well-crafted prompt combined with a solid RAG implementation is often more effective and easier to maintain than a complex fine-tuned model.

Your next step is to pick one narrow, high-value use case and build an evaluation harness for it first. Define what "good" looks like before you write a single line of agent code. This disciplined approach is what separates a cool tech demo from a system that drives real business results.

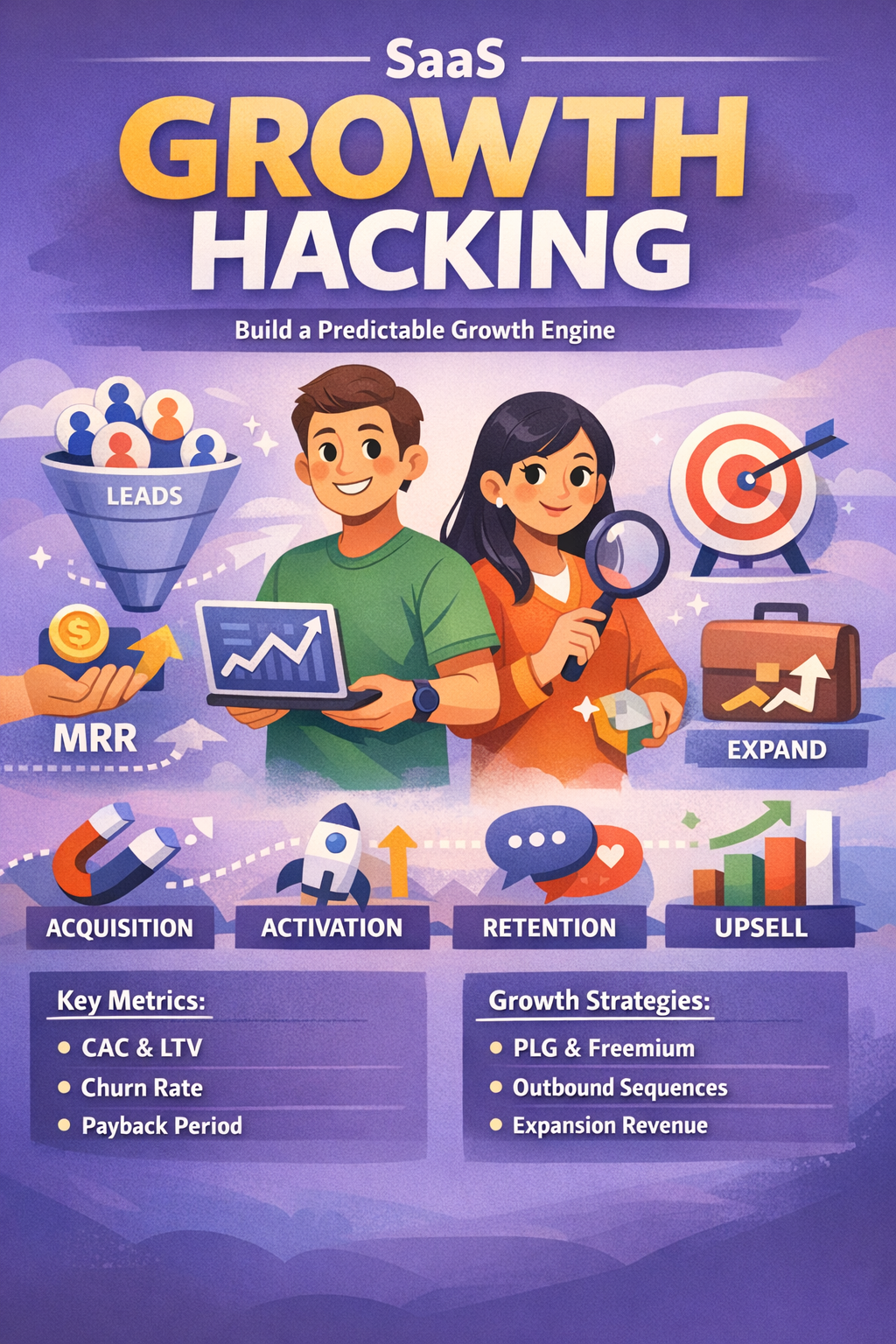

Trained agents are a core part of the modern tech stack. They are how today's fastest-growing companies scale their operations and create a competitive edge. For more ideas on how to apply these powerful concepts, our growth hacking guide is a great place to continue your journey.

And if you want to see how trained agents can specifically accelerate your growth workflows, systems like gojiberry.ai show what’s possible when these principles are applied to solve the toughest challenges in B2B sales and marketing.
